This evening, I thought about setting up a Flickr and a Twitter account. Okay, okay… I did not just think about it, I did it. What a freakin’ show…

After setting up my accounts, which was very simple, I began linking ’em to Facebook and back and uploading photos here and there… And as I did this, I began to wonder: “What the hell…?! What am I doing? All the information on every site and every picture on and in each account?”

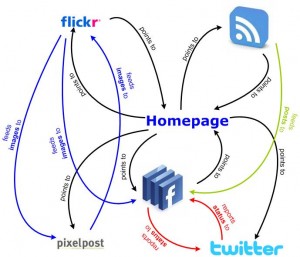

I drew a very simple sketch, and now I have a question:

Is this what Web 2.0 is supposed to be?

What do we have?

- a homepage

- a Facebook account

- a blog

- a Twitter account

- a Flickr account

- maybe an online diary

- maybe a guestbook

- maybe a photo blog

- maybe a gallery

- maybe a Google account (grabbing feeds and more…)

- maybe this and

- maybe that

And they all talk to each other. Am I the only one on this planet who is very, very confused by all those sites and applications? 🙂

There are lots of URL shorteners (I call them “un-fakers”) all over the planet. It totally pisses me off that I can never, ever remember any of those f*ckin’ providers. That’s (I guess) the main reason why I simply never used such URL redirectors.

So, I started my very own URL (un)faker. The site’s ready, the database is set up. You may shorten, fake and post your URLs now using:

Enjoy! 😉
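A URL shortener like this boils down to a database table plus a short code for each row. Here is a minimal sketch, assuming a single SQLite table `urls` and base62-encoded row IDs as the codes; the names `Shortener`, `encode_id` and `decode_code` are hypothetical and not taken from the actual (un)faker.

```python
import sqlite3
import string
from typing import Optional

# Base62 alphabet (0-9, a-z, A-Z); this particular ordering is an arbitrary choice.
ALPHABET = string.digits + string.ascii_lowercase + string.ascii_uppercase
BASE = len(ALPHABET)  # 62


def encode_id(n: int) -> str:
    """Turn a non-negative integer row ID into a short base62 code."""
    if n == 0:
        return ALPHABET[0]
    chars = []
    while n > 0:
        n, rem = divmod(n, BASE)
        chars.append(ALPHABET[rem])
    return "".join(reversed(chars))


def decode_code(code: str) -> int:
    """Inverse of encode_id; raises ValueError on characters outside ALPHABET."""
    n = 0
    for ch in code:
        n = n * BASE + ALPHABET.index(ch)
    return n


class Shortener:
    """Hypothetical shortener: one table mapping an auto-increment ID to a target URL."""

    def __init__(self, path: str = ":memory:") -> None:
        self.db = sqlite3.connect(path)
        self.db.execute(
            "CREATE TABLE IF NOT EXISTS urls ("
            "id INTEGER PRIMARY KEY AUTOINCREMENT, target TEXT NOT NULL)"
        )

    def shorten(self, url: str) -> str:
        """Store the URL and return the short code for its new row."""
        cur = self.db.execute("INSERT INTO urls (target) VALUES (?)", (url,))
        self.db.commit()
        return encode_id(cur.lastrowid)

    def resolve(self, code: str) -> Optional[str]:
        """Look up the target URL for a code, or None if there is no such row."""
        row = self.db.execute(
            "SELECT target FROM urls WHERE id = ?", (decode_code(code),)
        ).fetchone()
        return row[0] if row else None
```

In a fresh table, `Shortener().shorten("http://photoart.thomasgericke.de/")` returns `"1"`, and `resolve("1")` gives the long URL back, which a web front end would then send out as an HTTP redirect. Sequential codes are short but easy to guess; a real service might prefer random codes for that reason.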

Over the last few days, I spent a lot of time trying to optimize my websites for the Googlebot.

We all know that Google (an empire worth billions and billions of dollars) is the leading website for searching and finding information on the World Wide Web. That’s okay. As a user, I really like Google. Most of the time, I find whatever I’m looking for with Google. So it deserves its status.

As the maintainer of various websites, though, I get really stressed out by Google. It’s quite easy to announce a website. It’s also no problem to set up a Google account, use the webmaster tools, submit a sitemap (or several) and play with the settings.

I truly like the way Google’s algorithm works. PageRank is no easy thing and Google does a great job. Unfortunately, as the maintainer of very small websites, I find it very hard to get a high ranking. I’m quite often on the very first page of search results, as long as the searches are specific enough. To rank higher for less specific searches, I would need lots of websites linking back to mine. Okay, that’s the way Google works: a link to website X on website Y counts as a vote by website Y for website X. That’s a hard thing to manipulate.
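The “link as a vote” idea can be illustrated with the textbook form of PageRank, computed by power iteration. This is only a toy sketch, assuming a damping factor of 0.85 and a fixed number of iterations; Google’s real ranking combines far more signals, and `pagerank` is just an illustrative name.

```python
from typing import Dict, List


def pagerank(links: Dict[str, List[str]], damping: float = 0.85,
             iterations: int = 50) -> Dict[str, float]:
    """Toy PageRank: links maps each page to the pages it links to."""
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}  # start with a uniform distribution
    for _ in range(iterations):
        # Every page gets a small base share (the "random jump").
        new = {p: (1.0 - damping) / n for p in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # Each outgoing link is a vote worth an equal slice of this page's rank.
                share = damping * rank[page] / len(targets)
                for t in targets:
                    new[t] += share
            else:
                # A page without outgoing links spreads its rank over all pages.
                for p in pages:
                    new[p] += damping * rank[page] / n
        rank = new
    return rank
```

With `pagerank({"Y": ["X"], "Z": ["X"], "X": ["Y"]})`, page X ends up with the highest score because both Y and Z vote for it, while Z, which nobody links to, keeps only the base share of 0.05. That is exactly the small-site problem: without incoming links, there is little rank to pass around.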

While checking the ranking and indexed pages of my sites nearly every day, I discovered a few things that really stress me out:

1. Googlebot-Image

Two images from one of my websites are in the Google Images index. These images are very old, probably indexed in November 2007. Over the last few days, I studied lots and lots of websites and blogs and discovered: you have almost no chance to influence Googlebot-Image. Lucky you if it comes around at all. Some people on various websites state that it may take up to 24 months to get your images indexed.

What a pity.

2. dynamically generated websites

Once you have a website that serves its content dynamically, you either need Google to behave just like a real user and click from one page to another until it has indexed all your pages, or you need to submit sitemaps that are also generated dynamically.

And that’s what I did over the last few days. I wrote some quite simple scripts which (depending on the type of website) fetch URLs from the database and generate a list of links. Two such types are a WordPress blog and a PixelPost photoblog.
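A script of that kind can be quite short. The following is a rough sketch, not one of the scripts mentioned above: it assumes a hypothetical table `posts` with `slug` and `modified` columns (real WordPress or PixelPost schemas look different), and it writes the XML format described by the sitemaps.org protocol, entity-escaping each URL as that protocol requires. The function names are made up for illustration.

```python
import sqlite3
from typing import Iterable, Iterator, Optional, Tuple
from xml.sax.saxutils import escape

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
# The sitemap protocol asks for quotes to be escaped too, not just &, < and >.
EXTRA_ENTITIES = {'"': "&quot;", "'": "&apos;"}


def urls_from_db(conn: sqlite3.Connection,
                 base_url: str) -> Iterator[Tuple[str, Optional[str]]]:
    """Yield (url, lastmod) pairs from a hypothetical posts(slug, modified) table."""
    rows = conn.execute("SELECT slug, modified FROM posts ORDER BY modified DESC")
    for slug, modified in rows:
        yield f"{base_url.rstrip('/')}/{slug}/", modified


def build_sitemap(urls: Iterable[Tuple[str, Optional[str]]]) -> str:
    """Render (url, lastmod) pairs as a sitemaps.org <urlset> document."""
    lines = ['<?xml version="1.0" encoding="UTF-8"?>',
             f'<urlset xmlns="{SITEMAP_NS}">']
    for loc, lastmod in urls:
        lines.append("  <url>")
        lines.append(f"    <loc>{escape(loc, EXTRA_ENTITIES)}</loc>")
        if lastmod:  # optional W3C date such as 2008-05-01
            lines.append(f"    <lastmod>{lastmod}</lastmod>")
        lines.append("  </url>")
    lines.append("</urlset>")
    return "\n".join(lines)
```

Serving the output of `build_sitemap(urls_from_db(conn, "http://photoart.thomasgericke.de/"))` from a fixed URL and submitting that URL in the webmaster tools means new posts show up in the sitemap without any manual editing, because the list is rebuilt from the database on every request.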

Another example: Gallery2. Unfortunately, I found no easy way to extract the links from the database, but Gallery2 comes with a built-in sitemap.

But here comes the next thing that stresses me out: about 4 days ago, I submitted a Gallery2 sitemap with more than 900 URLs. As of today, only 220 of them have been indexed.
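To double-check how many URLs a generated sitemap really contains, a few lines of Python are enough. This sketch counts `<loc>` elements regardless of the XML namespace, on the assumption that some generators may still declare an older sitemap namespace; `count_sitemap_locs` is a made-up name. Note that in a sitemap index file, `<loc>` points to child sitemaps rather than pages. By the numbers above, 220 out of more than 900 URLs is under a quarter (220/900 ≈ 0.24).

```python
import xml.etree.ElementTree as ET
from typing import Union


def count_sitemap_locs(xml_data: Union[str, bytes]) -> int:
    """Count <loc> elements in a sitemap, ignoring whatever namespace it declares."""
    root = ET.fromstring(xml_data)
    # Namespaced tags look like "{http://...}loc"; compare only the local part.
    return sum(1 for el in root.iter() if el.tag.rsplit("}", 1)[-1] == "loc")
```

Reading the file as bytes, e.g. `count_sitemap_locs(open("sitemap.xml", "rb").read())`, lets the parser honor the encoding declared in the XML header.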

Google’s search engine is as fast as its indexing is slow.

3. sitelinks

Sitelinks are a very nice idea and look quite impressive. But how do you get sitelinks for your websites? Google says there’s an algorithm that calculates whether or not sitelinks would help users and automatically decides whether sitelinks are generated. But so far, I have found no information about how this algorithm works.

Damn, I’d love to have sitelinks.

Conclusion: the best and easiest way to get your websites to the top of search results is to get them indexed (and be patient about it), to have specific content on your site and to rely on interested users entering good search strings.

Good night for now…

Inspired by a co-worker and good friend of mine (http://www.oliver-schaef.de/), I set up a photoblog last night. Its base system is PixelPost (http://www.pixelpost.org/). I installed a theme and various add-ons and adjusted some PHP and HTML files to fit my needs.

This photoblog, “photo art”, is now accessible via http://photoart.thomasgericke.de/ or via http://www.thomasgericke.de/v4/interactive/pixelpost/; it makes practically no difference 🙂


Hopefully, I will manage to upload a few more pictures and, even more hopefully, take more interesting photos in the future.